One of the coolest new features in ArubaOS 8 is the ArubaOS 8 Cluster, in my opinion the first real cluster for WLAN infrastructures. This post shows how simple the configuration of the ArubaOS 8 Cluster is.

ArubaOS 8 Cluster: Requirements and Features

To use the ArubaOS 8 Cluster, you need to run at least AOS 8.0. In the examples below, I use 8.4.0.1.

I also recommend making sure that the time is synchronized across all cluster members and that you use only controllers of the same model in one cluster. The Mobility Master (MM) cannot be part of the cluster; only Managed Devices (MDs) can form a cluster.

At the time of writing, 70xx controllers and virtual controllers can form a cluster of up to 4 devices, and 72xx controllers a cluster of up to 12 devices. If you need to terminate Remote APs (RAPs) on the cluster, do not use more than 4 cluster members.
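When planning a larger deployment, these limits are easy to overlook. The following Python sketch checks a planned cluster against the limits quoted in this post (4 members for 70xx and virtual controllers, 12 for 72xx, at most 4 when RAPs terminate on the cluster) and against the one-model-per-cluster recommendation. The function name `check_cluster_plan` and the family keys are hypothetical, and the limits may differ in other AOS releases.

```python
from typing import List

# Limits as quoted in this post (AOS 8.x at the time of writing); check the
# documentation of your release. The family keys are hypothetical labels.
MAX_MEMBERS = {"70xx": 4, "vmc": 4, "72xx": 12}
MAX_MEMBERS_WITH_RAPS = 4


def check_cluster_plan(models: List[str], terminates_raps: bool) -> List[str]:
    """Return the problems found in a planned cluster (an empty list means none)."""
    if not models:
        return ["no controllers in the plan"]
    problems = []
    families = set(models)
    if len(families) > 1:
        # Recommendation from this post: one controller model per cluster.
        problems.append(f"mixed controller families: {sorted(families)}")
    else:
        family = models[0]
        limit = MAX_MEMBERS.get(family)
        if limit is None:
            problems.append(f"unknown controller family: {family}")
        elif len(models) > limit:
            problems.append(f"{len(models)} members exceed the {family} limit of {limit}")
    if terminates_raps and len(models) > MAX_MEMBERS_WITH_RAPS:
        problems.append(f"RAP termination: use at most {MAX_MEMBERS_WITH_RAPS} members")
    return problems


print(check_cluster_plan(["vmc", "vmc"], terminates_raps=False))
print(check_cluster_plan(["72xx"] * 6, terminates_raps=True))
```

The first call reports no problems; the second flags only the RAP limit, because six 72xx controllers are within the general limit of 12.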

So what benefits do I get from an ArubaOS 8 Cluster? First of all, load balancing: all clients and all APs are balanced across the cluster members. I will show you the details below.

Second, seamless roaming. Each client is anchored on a dedicated controller, chosen by the load-balancing algorithm. Roaming from one AP to another does not change this, even if the new AP is anchored on a different cluster member, so the client's termination point always stays the same.

Third, hitless failover. For every AP and every client, the cluster defines a primary and a secondary controller. If the primary controller fails, the secondary controller takes over. This feature also enables an upgrade procedure without loss of client connectivity, but that is a topic for a future post.
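Why roaming does not move the client can be illustrated with a simplified model. The sketch below assumes that each client MAC is hashed into a bucket and that a bucket map assigns an active and a standby controller (UAC and S-UAC) to every bucket; the `BUCKET` column in the `show aaa cluster` output later in this post hints at such a mechanism. The hash, the bucket count of 256 and the round-robin map are assumptions for illustration, not ArubaOS internals. The point is that the AP is not an input to the lookup.

```python
import hashlib
from typing import List, Tuple

# Assumption: a fixed number of buckets; the value is illustrative only.
NUM_BUCKETS = 256


def bucket_for(client_mac: str) -> int:
    """Map a client MAC to a bucket with a hypothetical hash (not Aruba's)."""
    digest = hashlib.sha256(client_mac.lower().encode()).digest()
    return int.from_bytes(digest[:2], "big") % NUM_BUCKETS


def build_bucket_map(members: List[str]) -> List[Tuple[str, str]]:
    """Assign an (active, standby) controller pair to every bucket, round-robin."""
    if len(members) < 2:
        raise ValueError("a redundant cluster needs at least two members")
    n = len(members)
    return [(members[b % n], members[(b + 1) % n]) for b in range(NUM_BUCKETS)]


def anchor_for(client_mac: str, bucket_map: List[Tuple[str, str]]) -> Tuple[str, str]:
    """Return (UAC, S-UAC) for a client. Note that the AP is not an input."""
    return bucket_map[bucket_for(client_mac)]


members = ["10.201.201.11", "10.201.201.12"]
bucket_map = build_bucket_map(members)
uac, s_uac = anchor_for("60:f4:45:0b:28:66", bucket_map)
print(f"UAC={uac} S-UAC={s_uac}")
```

Whichever AP the client roams to, the lookup returns the same pair, so the client's traffic keeps terminating on the same controller.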

ArubaOS 8 Cluster: Configuration

The configuration is very simple. As always, I will show the basic steps. There is always room for more specific configurations.

I assume that the MDs are already known to the MM and connected to it. In my case, I have two virtual controllers.

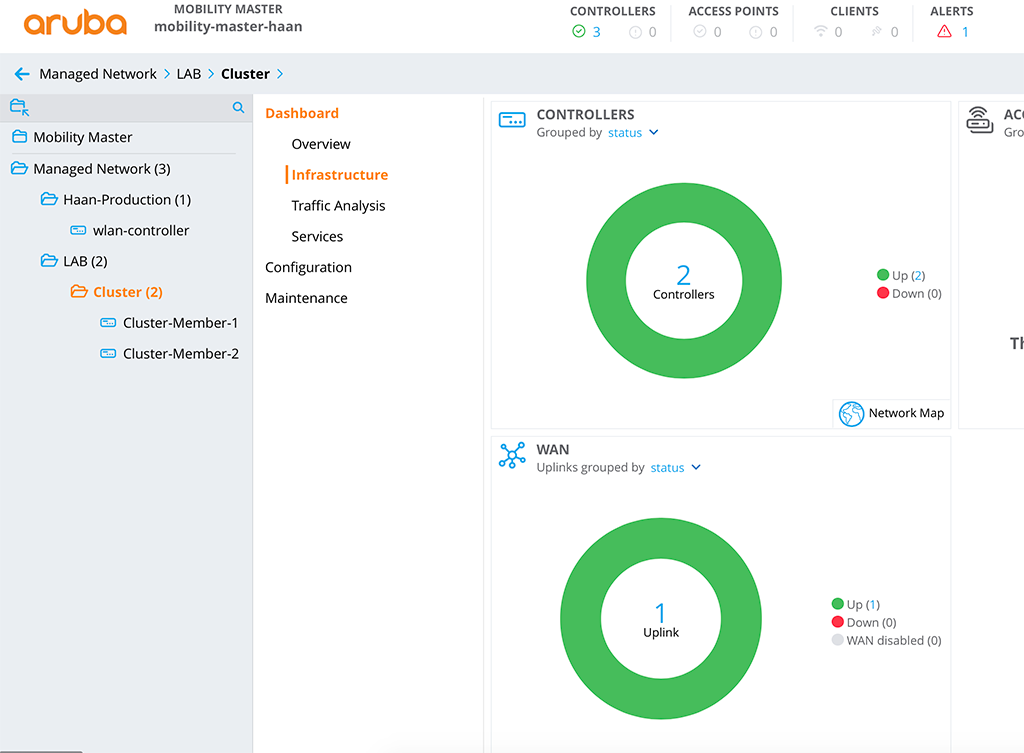

Let’s start with the configuration. Just connect to the MM and start the configuration on the group just above the two controllers. In my case, this is a group called ‘Cluster’:

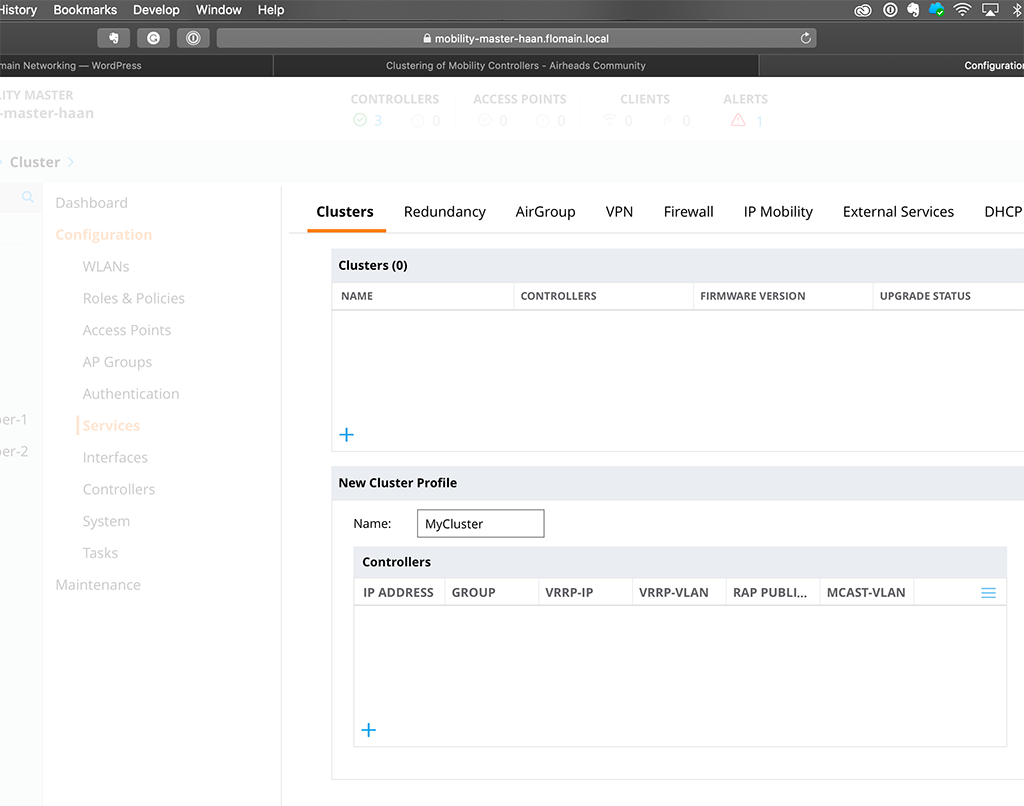

First, go to ‘Configuration–>Services–>Cluster’ and hit the ‘+’ button in the ‘Clusters’ table:

This creates a new ‘Cluster Profile’. Just give it a name and start adding controllers by hitting the ‘+’ button in the ‘Controllers’ table:

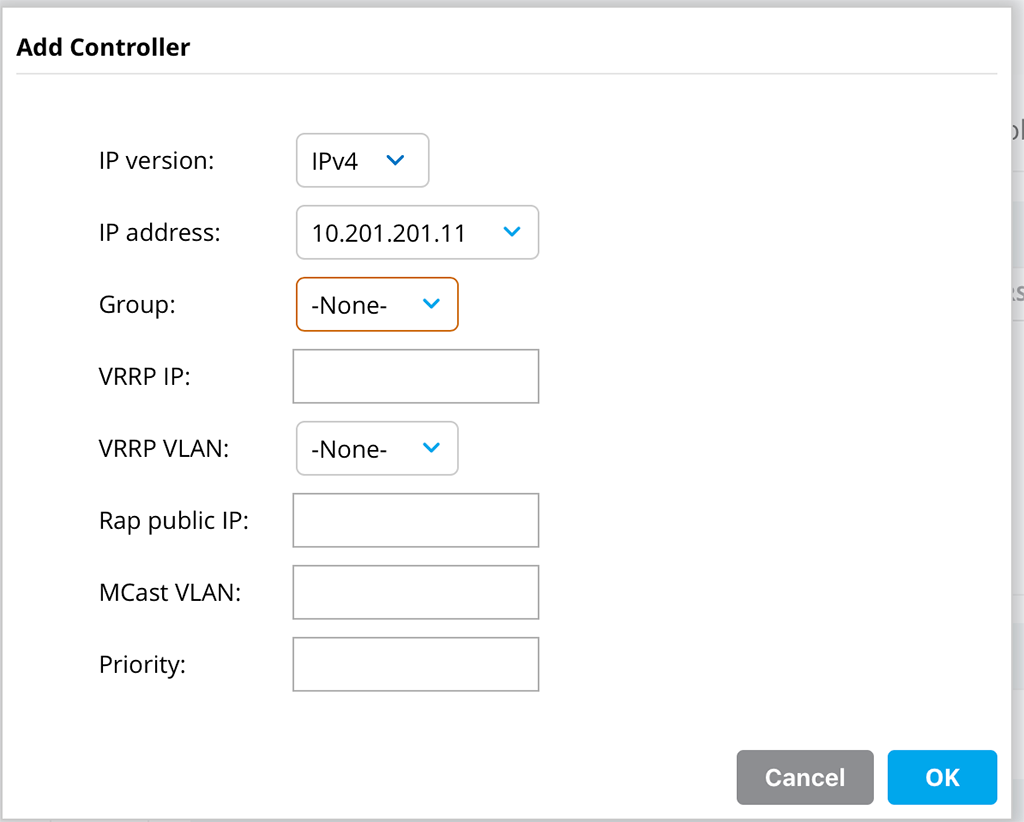

For this basic setup, I only set ‘IP version’ to ‘IPv4’ and select the ‘IP address’ of one of the controllers. The other options are not needed for a basic setup.

For more advanced setups, you can use the ‘Group’ option to assign controllers to different zones in your data center. Suppose you have two availability zones in your data center, with controllers in both zones forming one cluster. The problem is that the cluster is not aware of your availability zones. With the ‘Group’ option, you can specify those zones, and the cluster makes sure that the primary controller for an AP or client is in one group while the secondary controller is in a different group, and therefore in a different availability zone.
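The effect of the ‘Group’ option can be sketched as a selection rule: when choosing the standby controller, prefer members whose group differs from the active controller's group. The Python below is a hypothetical model, not the actual ArubaOS algorithm; the controller names are made up, and using the lowest standby count as the tie-breaker is an assumption.

```python
from typing import Dict


def pick_standby(active: str, groups: Dict[str, int], load: Dict[str, int]) -> str:
    """Pick a standby controller for an AP or client anchored on `active`.

    groups maps controller name -> 'Group' number from the cluster profile.
    load maps controller name -> current standby count (hypothetical metric).
    """
    others = [m for m in groups if m != active]
    if not others:
        raise ValueError("no other cluster member available")
    # Prefer members in a different group (availability zone) than the active one.
    cross_zone = [m for m in others if groups[m] != groups[active]]
    candidates = cross_zone or others
    # Among the candidates, take the least-loaded one; the name breaks ties.
    return min(candidates, key=lambda m: (load.get(m, 0), m))


groups = {"mc-zone-a-1": 1, "mc-zone-a-2": 1, "mc-zone-b-1": 2, "mc-zone-b-2": 2}
load = {"mc-zone-b-1": 10, "mc-zone-b-2": 3}
print(pick_standby("mc-zone-a-1", groups, load))
```

An AP anchored in zone A gets its standby in zone B, so losing a whole zone still leaves every AP with a surviving controller. Without groups (all members in the same group), the rule falls back to any other member.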

The VRRP settings here are only needed if you work with RADIUS CoA (Change of Authorization). I will cover this in a separate post.

‘RAP public IP’ is needed if you terminate RAPs on the cluster and the cluster members do not have public IPs themselves but are reachable through NAT.

With the ‘Priority’ field, you can define which controller should become the cluster leader. For most setups, it does not matter which controller is the leader.

Add all controllers that should be part of the cluster, then save the configuration.

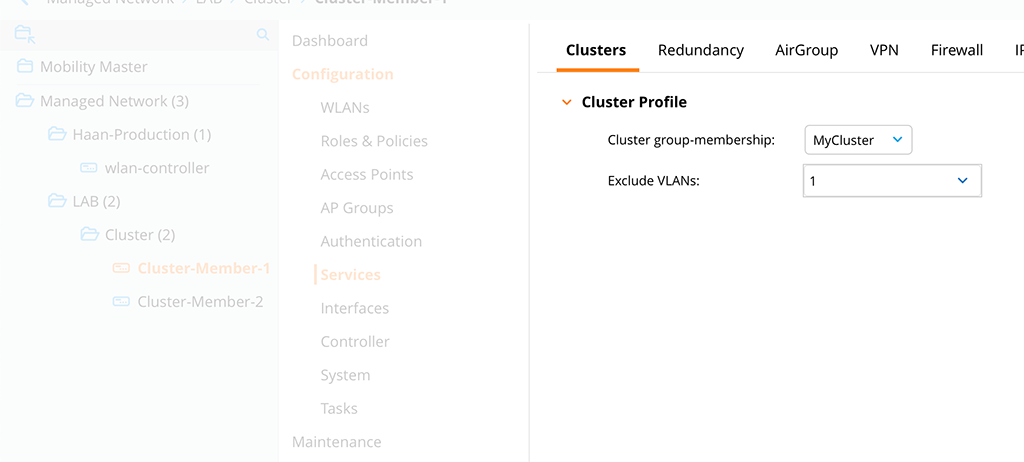

Now, move from the group to the controller itself, but stay in the same configuration menu:

On the controller hierarchy level, select the ‘Cluster Profile’ created above in the ‘Cluster group-membership’ field and configure the VLANs that should be excluded from the cluster's VLAN probing.

This last part is very important, because all cluster members probe each VLAN for connectivity. If they do not see each other on every VLAN, except the excluded ones configured here, they will only form an L3-connected cluster instead of an L2-connected one.

Save the configuration and do this for all cluster members.
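The VLAN probing rule can be modeled as a small classification function. This is a simplified sketch, assuming a peer counts as `L2-Connected` (the value shown in the `Connection-Type` column of `show lc-cluster group-membership`) only when it answered on every probed VLAN that is not excluded, and as `L3-Connected` otherwise. The VLAN IDs are hypothetical apart from VLAN 201, which carries the cluster VIP in this post.

```python
from typing import Dict, Set


def connection_type(probe_results: Dict[int, bool], excluded_vlans: Set[int]) -> str:
    """Classify a peer from per-VLAN probe results (VLAN ID -> peer seen?)."""
    probed = [seen for vlan, seen in probe_results.items() if vlan not in excluded_vlans]
    # Simplification: L2 only if at least one VLAN was probed and the peer answered on all of them.
    if probed and all(probed):
        return "L2-Connected"
    return "L3-Connected"


# Hypothetical example: VLAN 1 is only trunked to one controller, VLAN 201 is the
# controller VLAN from this post, and VLAN 202 is a made-up user VLAN.
probes = {1: False, 201: True, 202: True}
print(connection_type(probes, excluded_vlans=set()))
print(connection_type(probes, excluded_vlans={1}))
```

A single VLAN that is not trunked to every member is enough to downgrade the cluster; excluding it restores the L2-connected state.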

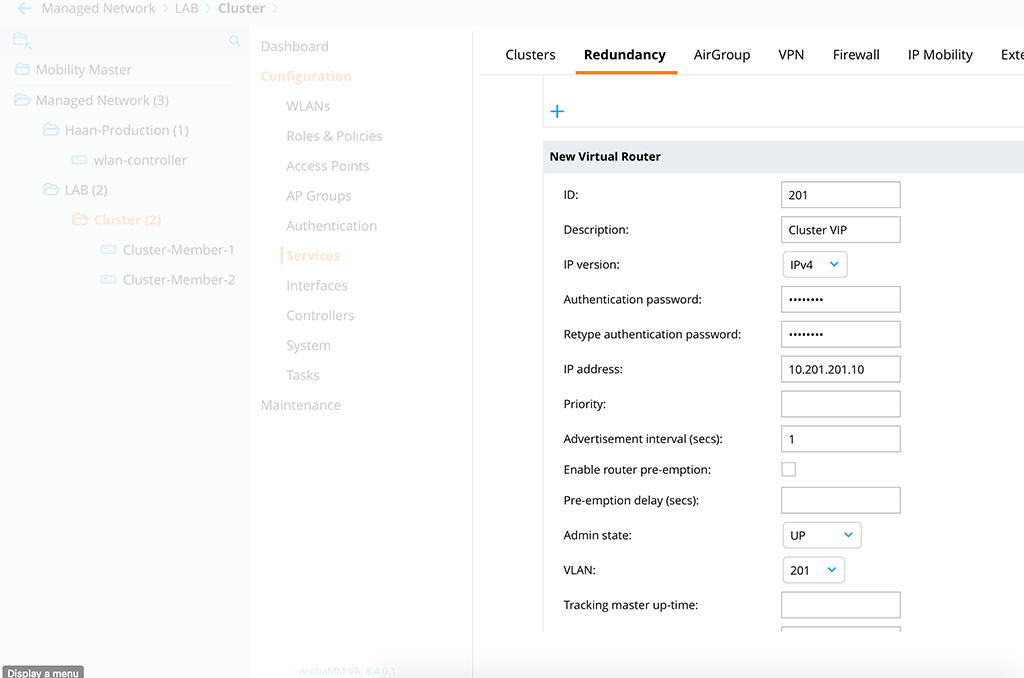

The last step of the basic setup is to create the cluster VIP. This is a normal VRRP configuration. Go back up to the group hierarchy level, open ‘Configuration–>Services–>Redundancy–>Virtual Router Table’ and create a new VRRP entry:

This is a default VRRP setup. If you have set a priority for the cluster leader, it makes sense to set the VRRP ‘Priority’ accordingly. Save the configuration.
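The VRRP part follows the standard protocol rules, so two details of the `show vrrp` output further down can be checked by hand. Per RFC 3768/5798, the IPv4 virtual MAC address is 00:00:5e:00:01 followed by the VRID, so VRID 201 gives 00:00:5e:00:01:c9. With equal priorities, the router with the higher primary IP address becomes Master, which is consistent with Cluster-Member-2 (10.201.201.12) being MASTER while Cluster-Member-1 is the cluster leader. The sketch below only models a fresh election and ignores timers and preemption.

```python
import ipaddress
from typing import Dict


def vrrp_virtual_mac(vrid: int) -> str:
    """IPv4 VRRP virtual MAC per RFC 3768/5798: 00-00-5E-00-01-{VRID}."""
    if not 1 <= vrid <= 255:
        raise ValueError("VRID must be between 1 and 255")
    return f"00:00:5e:00:01:{vrid:02x}"


def vrrp_master(priorities: Dict[str, int]) -> str:
    """Pick the Master in a fresh election: highest priority, ties go to the higher primary IP.

    Simplification: ignores advertisement timers and preemption.
    """
    return max(priorities, key=lambda ip: (priorities[ip], ipaddress.IPv4Address(ip)))


print(vrrp_virtual_mac(201))
print(vrrp_master({"10.201.201.11": 100, "10.201.201.12": 100}))
print(vrrp_master({"10.201.201.11": 110, "10.201.201.12": 100}))
```

The last call shows why aligning the VRRP priority with the cluster leader helps: with a higher priority, Cluster-Member-1 would win a fresh election. With preemption disabled, an existing Master keeps its role until it goes down.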

Now we can start checking if everything works as expected. First, let’s check the cluster setup:

(Cluster-Member-1) #show lc-cluster group-membership
Cluster Enabled, Profile Name = "MyCluster"
Redundancy Mode On
Active Client Rebalance Threshold = 50%
Standby Client Rebalance Threshold = 75%
Unbalance Threshold = 5%
AP Load Balancing: Enabled
Active AP Rebalance Threshold = 50%
Active AP Unbalance Threshold = 5%
Active AP Rebalance AP Count = 30
Active AP Rebalance Timer = 1 minutes
Cluster Info Table
------------------
Type  IPv4 Address     Priority  Connection-Type  STATUS
----  ---------------  --------  ---------------  ------
self  10.201.201.11    128       N/A              CONNECTED (Leader)
peer  10.201.201.12    128       L2-Connected     CONNECTED (Member, last HBT_RSP 19ms ago, RTD = 1.001 ms)

(Cluster-Member-2) #show lc-cluster group-membership
Cluster Enabled, Profile Name = "MyCluster"
Redundancy Mode On
Active Client Rebalance Threshold = 50%
Standby Client Rebalance Threshold = 75%
Unbalance Threshold = 5%
AP Load Balancing: Enabled
Active AP Rebalance Threshold = 50%
Active AP Unbalance Threshold = 5%
Active AP Rebalance AP Count = 30
Active AP Rebalance Timer = 1 minutes
Cluster Info Table
------------------
Type  IPv4 Address     Priority  Connection-Type  STATUS
----  ---------------  --------  ---------------  ------
peer  10.201.201.11    128       L2-Connected     CONNECTED (Leader, last HBT_RSP 11ms ago, RTD = 0.988 ms)
self  10.201.201.12    128       N/A              CONNECTED (Member)

This looks great. We have an L2-connected cluster, exactly what we were looking for. Now, let’s check the VIP:
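Checking the members by eye works for two controllers; for larger clusters, a small parser can do the same check. The Python sketch below parses the ‘Cluster Info Table’ from `show lc-cluster group-membership` output that you have already captured as text (how you collect it, for example over SSH, is out of scope here) and reports the leader and whether every peer is CONNECTED and L2-Connected. The regular expression is written against the output shown in this post; other AOS releases may format the table differently.

```python
import re
from typing import Dict, List

# Matches rows such as:
# "peer  10.201.201.12  128  L2-Connected  CONNECTED (Member, ...)"
ROW = re.compile(r"^(self|peer)\s+(\d{1,3}(?:\.\d{1,3}){3})\s+(\d+)\s+(\S+)\s+(.+)$")


def parse_cluster_table(output: str) -> List[Dict]:
    """Extract the rows of the 'Cluster Info Table' from captured CLI text."""
    rows = []
    for line in output.splitlines():
        match = ROW.match(line.strip())
        if match:
            kind, ip, priority, connection, status = match.groups()
            rows.append({"type": kind, "ip": ip, "priority": int(priority),
                         "connection": connection, "status": status})
    return rows


def all_peers_l2_connected(rows: List[Dict]) -> bool:
    """True if there is at least one peer and every peer is CONNECTED over L2."""
    peers = [r for r in rows if r["type"] == "peer"]
    return bool(peers) and all(
        r["status"].startswith("CONNECTED") and r["connection"] == "L2-Connected"
        for r in peers
    )


# Output of Cluster-Member-1 from this post (table part only).
captured = """\
Type  IPv4 Address     Priority  Connection-Type  STATUS
----  ---------------  --------  ---------------  ------
self  10.201.201.11    128       N/A              CONNECTED (Leader)
peer  10.201.201.12    128       L2-Connected     CONNECTED (Member, last HBT_RSP 19ms ago, RTD = 1.001 ms)
"""

rows = parse_cluster_table(captured)
leader = next(r["ip"] for r in rows if "Leader" in r["status"])
print(f"leader={leader} healthy={all_peers_l2_connected(rows)}")
```

Running the same check against every member catches asymmetric problems, such as one member seeing a peer only at L3.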

(Cluster-Member-1) #show vrrp
Virtual Router 201:
Description Cluster VIP
Admin State UP, VR State BACKUP
IP Address 10.201.201.10, MAC Address 00:00:5e:00:01:c9, vlan 201
Priority 100, Advertisement 1 sec, Preemption Disable Delay 0
Auth type PASSWORD, Auth data: ********
tracking is not enabled

(Cluster-Member-2) #show vrrp
Virtual Router 201:
Description Cluster VIP
Admin State UP, VR State MASTER
IP Address 10.201.201.10, MAC Address 00:00:5e:00:01:c9, vlan 201
Priority 100, Advertisement 1 sec, Preemption Disable Delay 0
Auth type PASSWORD, Auth data: ********
tracking is not enabled

The cluster VIP is up and running as well, and we need it in the next steps. Next steps? The cluster itself is already up and running, but I would like to show you some additional details as well. So let’s connect some APs, or at least one AP.

ArubaOS 8 Cluster: Connecting APs and Clients

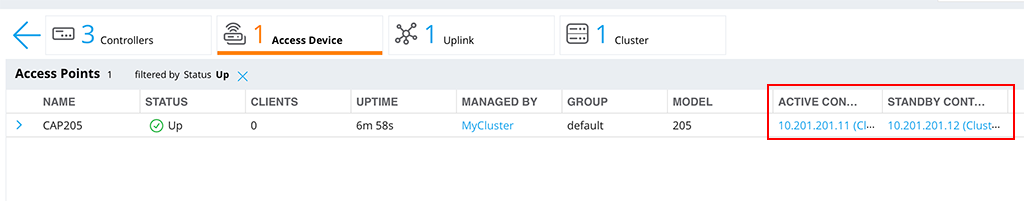

To connect APs to the cluster, you can use the cluster VIP. In my case, I use DNS discovery for the controller. The ‘

This also corresponds to the CLI output:

(Cluster-Member-1) #show ap database
AP Database
-----------
Name    Group    AP Type  IP Address     Status      Flags  Switch IP      Standby IP
----    -----    -------  ----------     ------      -----  ---------      ----------
CAP205  default  205      10.200.200.10  Up 5m:34s   2      10.201.201.11  10.201.201.12

(Cluster-Member-1) #show lc-cluster load distribution ap
Cluster Load Distribution for APs
---------------------------------
Type  IPv4 Address     Active APs      Standby APs
----  ---------------  --------------  ---------------
self  10.201.201.11    1               0
peer  10.201.201.12    0               1
Total: Active APs 1 Standby APs 1

(Cluster-Member-2) #show ap database
AP Database
-----------
Name    Group    AP Type  IP Address     Status      Flags  Switch IP      Standby IP
----    -----    -------  ----------     ------      -----  ---------      ----------
CAP205  default  205      10.200.200.10  Up 11m:27s  2S     10.201.201.11  10.201.201.12

(Cluster-Member-2) #show lc-cluster load distribution ap
Cluster Load Distribution for APs
---------------------------------
Type  IPv4 Address     Active APs      Standby APs
----  ---------------  --------------  ---------------
peer  10.201.201.11    1               0
self  10.201.201.12    0               1
Total: Active APs 1 Standby APs 1

The AP is connected to both controllers without any configuration other than the cluster configuration above.

In this case, ‘Cluster-Member-1’ is the AAC (AP Anchor Controller) and ‘Cluster-Member-2’ is the S-AAC (Standby AP Anchor Controller). If the AAC fails, the AP fails over to the S-AAC, which becomes the new AAC for this AP. If the cluster had more than two controllers, the AP would also learn a new S-AAC.
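The failover behavior described above can be summarized in a few lines. This Python sketch is a hypothetical model, not ArubaOS code: when the AAC fails, the S-AAC is promoted, and if a spare member is left, it becomes the new standby. Picking the first spare member is an assumption; the real cluster uses its load-balancing logic. According to this post, the same procedure applies to UAC and S-UAC for clients.

```python
from typing import List, Optional, Tuple


def fail_over(anchor: Tuple[str, Optional[str]], members: List[str],
              failed: str) -> Tuple[str, Optional[str]]:
    """Return the new (AAC, S-AAC) pair of an AP after the member `failed` goes down.

    Hypothetical model: the S-AAC is promoted, and the first remaining member that
    is not the new AAC becomes the new S-AAC (None if no member is left).
    """
    active, standby = anchor
    if failed == active:
        if standby is None:
            raise RuntimeError("no standby controller: the AP loses its anchor")
        active, standby = standby, None  # S-AAC becomes the new AAC
    elif failed == standby:
        standby = None
    if standby is None:
        spares = [m for m in members if m not in (failed, active)]
        standby = spares[0] if spares else None
    return active, standby


two = ["Cluster-Member-1", "Cluster-Member-2"]
print(fail_over(("Cluster-Member-1", "Cluster-Member-2"), two, "Cluster-Member-1"))
```

With two members, the AP ends up on Cluster-Member-2 without a standby; with three or more, a new standby is assigned right away.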

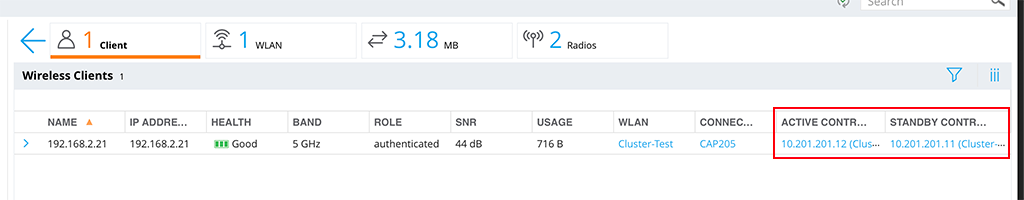

The same is true for the clients. They are also balanced across the cluster.

In this case, the client is anchored on a different controller than the AP, so this is real load balancing. The CLI shows the same:

(Cluster-Member-1) #show aaa cluster essid-all users standby
Dormant Users
-------------
ESSID         BUCKET  MAC                IP            Active UAC     Standby UAC
-----         ------  -----              ----          ----------     -----------
Cluster-Test  69      60:f4:45:0b:28:66  192.168.2.21  10.201.201.12  10.201.201.11

(Cluster-Member-2) #show aaa cluster essid-all users
Active Users for ESSID : Cluster-Test
--------------------------------------
BUCKET  MAC                IP            Active UAC     Standby UAC
------  ---                --            ----------     -----------
69      60:f4:45:0b:28:66  192.168.2.21  10.201.201.12  10.201.201.11

In this case, ‘Cluster-Member-2’ is the UAC (User Anchor Controller) and ‘Cluster-Member-1’ is the S-UAC (Standby User Anchor Controller). If the UAC fails, the same procedure applies as for the AP above: the user fails over to the S-UAC, which becomes the new UAC. If the cluster had more than two controllers, a new S-UAC would be assigned as well.

Conclusion

As you can see, the setup of an ArubaOS 8 Cluster is very easy. There is no need for any special configuration, and it works out of the box. Sure, as always, you can tweak more settings, but for a basic setup this is not needed.

My recommendation for all implementations is to use a controller cluster if you start from scratch or if you migrate from AOS 6.x to AOS 8.x.

If you find this post useful, leave me a comment and share it with your friends. If you don’t like the post, leave me a comment and tell me what you don’t like. But whatever you do, leave me a comment.

Very good article. I'm having issues with my Mobility Controller cluster: APs register only to the standby controller, not to the active controller. I'm running AOS 8.5.0.4 on the MCs and 8.5.0.5 on the MMs. I'm going to downgrade to 8.4.0.1 across all MCs and MMs for now, as Aruba TAC is unable to find the root cause. Do you have any tips on software version issues with controller clusters managed by a Mobility Master?

Hi Dinesh,

thanks for the comment. I really appreciate your feedback.

I’m not sure if I understand your question correctly. You are running a cluster setup where the APs only register to the standby controller, but not to the active controller? I’m not sure this is even possible, as the AP first registers with the AAC before it tries to reach the S-AAC.

From my experience, I would not downgrade to 8.4.x. If you need the features of 8.4 and beyond, or you need support for 802.11ax APs, I would go with 8.5.x or even 8.6.x (for production environments 8.5.x, at least at the moment).

Have you checked the release notes of 8.5.0.5 to see whether your problem is mentioned there?

BR

Florian

Nice write up. This is exactly what I was looking for and will be implementing soon. Temporarily using Instant while I configure my 2 controllers as described here.

Hi Chris,

Thanks for the feedback. Really appreciated.

BR

Florian

Hi Florian,

Do you need the cluster VIP on the same network as the management addresses of the controllers?

Or what does the configuration look like if the addresses of the APs and controllers are in different networks?

Hi Maurice,

The cluster VIP is primarily used as the go-to address for CAPs without configured controller IPs, so it is the IP you would use for AP discovery (either DNS- or DHCP-based). Taking this into account, this IP can also reside on a different VLAN.

BR

Florian

Hi Flo,

excellent post, easy to follow 😉

my question: in many cluster setups, it is recommended to make members visible to each other in a disjunctive fashion to avoid split brain scenarios.

how can we achieve this in a two-member setup without an MM? my idea would be: raise a dedicated VLAN, bind it to a dedicated physical link between the members, and make sure the new VLAN is not excluded in the cluster profile?

Hi Marco,

Let’s think about what would happen if we had a split-brain situation within the cluster, and what could go wrong to get into this state. Let’s assume the controllers do not see each other but do see the APs. And, to keep it simple, let’s assume we only have two cluster members.

If the split-brain occurs, both controllers send an updated node list to the AP, containing only the controllers they can still reach. In our case, controller 1 sends a new node list with only controller 1 in it, and controller 2 does the same with only controller 2 in it.

Whichever update reaches the AP first updates the AP's node list, and the tunnel to the removed controller is torn down.

The AP then only has a connection to one of the two controllers, so I do not consider this an issue.

But from my point of view, this scenario is quite rare. Most of the time, controllers are connected to switches, and if the connection between the controllers is down, the controller itself is usually offline as well, at least in most of the scenarios I’m aware of.
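The node-list race described above can be written down as a small model. The Python below sketches this reasoning only, assuming that each update shrinks the AP's tunnel set to the received node list and that an update from a controller the AP no longer has a tunnel to is ignored; it does not reproduce actual AP firmware behavior, and the controller names are made up.

```python
from typing import Iterable, Set, Tuple


def apply_node_lists(tunnels: Set[str], updates: Iterable[Tuple[str, Set[str]]]) -> Set[str]:
    """Apply node-list updates in arrival order and return the remaining tunnels.

    Each update is (sending controller, node list it advertises). Assumptions of
    this model: an update shrinks the tunnel set to the advertised node list, and
    an update from a controller the AP no longer has a tunnel to is ignored.
    """
    remaining = set(tunnels)
    for sender, node_list in updates:
        if sender not in remaining:
            continue  # tunnel already torn down, update ignored (assumption)
        remaining &= node_list
    return remaining


split_brain = [("controller-1", {"controller-1"}), ("controller-2", {"controller-2"})]
print(apply_node_lists({"controller-1", "controller-2"}, split_brain))
print(apply_node_lists({"controller-1", "controller-2"}, reversed(split_brain)))
```

Either arrival order leaves the AP with exactly one tunnel; only which controller survives depends on timing.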

Hope this helps.

BR

Florian